I’ve seen numerous demos of Microsoft's Parallel Framework Extensions ( Pfx), and as you would expect they focus on showing how easy it is to make sequential code go faster on multiple cores. Whilst there is no disputing that the code that is run certainly scales as you add new processors, you can’t get away from the fact that a lot of these demos are contrived, heavy use of Thread.SpinWait to simulate lots of computation for each parallel task. Take away the SpinWait and do some simple one line piece of computation and you will almost certainly end up with a far worse performance than you had with sequential approach. The reason being that creating , scheduling and correlating results is not free. So when deciding to utilise Pfx you need to ensure that you each piece of parallel activity is sufficient in size to benefit from the additional cores. This is were the problem lies whilst its easy to drop in a Thread.SpinWait for demo purposes its often not easy to take your piece of real world computation you wish to parallelise and make each piece parallel activity be of significant size.

One demo I’ve seen over and over again is summing the nodes of a binary tree. The sequential version looks like this

private static int SumTree(Node root)

{

return root.Value + (root.Left == null ? 0 : SumTree(root.Left)) + (root.Right == null ? 0 : SumTree(root.Right));

}

This could then be parallelised like so

private static int ParallelSumTree(Node root)

{

int lhsTotal = 0;

int rhsTotal = 0;

Future<int> lhs = Future<int>.Create(() => root.Left == null ? 0 : ParallelSumTree(root.Left));

Future<int> rhs = Future<int>.Create(() => root.Right == null ? 0 :ParallelSumTree(root.Right));

lhsTotal = lhs.Value;

rhsTotal = rhs.Value;

return root.Value + lhsTotal + rhsTotal;

}

Future<T> is a Pfx type that is used to create a parallel task that yields a result in this case an int. The instance of Future<T> has a Value property that you can use to get the result of the parallel task. If you request the Value and the task has not yet completed you block waiting for the result. I personally really like this model but they have scrapped this type in .NET 4.0 release of Pfx and have the concept that a parallel task can return a value as opposed to void.

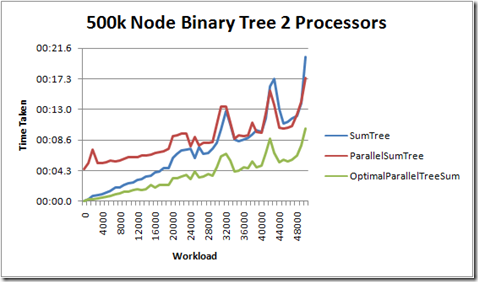

This form of parallelisation can work if the computation of both of the futures takes a reasonable time. To demonstrate this I have an implementation that allows the amount of computation to vary, utilising a for…loop. I varied the length of the loop from 0 to 50,000 iterations. With the case of 0 iterations simulating the real task in hand to sum all nodes in the binary tree, and 50,000 to simulate a more expensive operation to perform on each node. I ran the code over a binary tree of 500k nodes with 2 cpus.

What the result shows is that you need to have a workload equivalent to 42k loop iterations for the parallel version to be faster, but note not twice as fast. I then ran the same piece of code on a 8 core machine, here the point in which the parallel version was more effective was around 10k loop iteration mark. This shows that the size of the workload to be effective for parallelisation is also a function of the number of available cores. Today you may well rule out a parallel variant of an algorithm as it doesn’t offer a speed up, whilst in the future when we have say 32+ cores it may well do so, which is why the MS guys are keen to advocate you build for future concurrency not necessarily what you have today. However we obviously don’t want to have a slower parallel version today for the possibility of some future benefit. The reason for this speed up as we add more cores means we run more code in parallel this is not only our algorithm but also the task creation code.

The eight processor version is a lot more compelling than the two processor version, however I'm not comfortable with this, as the speed up I’m getting is not close to 8x speed up which is what the ideal case would be, in fact it is at best about 4 times. In some cases it may be reasonable to accept this, as the effort to make the 4x speed up was very little work, and if we wait a while until we have say 32 procs we will continue to get a speed up just in the same way we did in the past with greater clock speed.

If you do want to squeeze every last drop out of the machine then you will need to think of ways to optimise the algorithm so that the overhead of parallelisation is reduced. In this case its about not having to manage lots of separate tasks. For this algorithm a couple of things stand out that will help here.

- The tree is perfectly balanced

- The computation for each node is identical

We can take advantage of these two facts along with the knowledge of how many cores we currently have available, and simply divide the tree up between all the cores. That way we take minimal hit for task creation, and each core can run flat out on its given piece of work, and since all work items are equal we would expect all cores to be utilised the same. The two graphs below show this new implementation which has a far better performance.

The two above facts about the tree made producing an efficient parallel implementation reasonably easy. If you had a unbalanced tree it may be worth balancing it or producing a series of other containers that contain the collection of nodes spread evenly. For this to be effective the computational work has to be of greater than the cost of scanning the tree. Alternatively a more dynamic approach could be undertaken were by the algorithm kept track of how many cores it was utilising and when it detected full utilisation it keeps all new activities on the same core as part of the same task. It is often worth considering parallelisation when deciding how to structure your data.

Conclusion

Parallel.For and use of lots of tasks will not necessarily make your code go faster, it may well go slower. The piece of work being parallelised must be of sufficient size to benefit from the overhead of parallel framework and finding that piece of work is often not as easy as you might expect. Going forward as machines have greater and greater number of cpu’s that size of computation necessary to take advantage of the framework will get less. However this will not always result in the most effective us of the cores. If you want to take full advantage of the cores to get maximum performance you will need to structure the algorithm so that understands the environment that it is running in, in order to best utilise resources, and it is this part that often requires the hard thinking. So is the free lunch back? Well to some degree if you want an ok lunch, but for a gut busting lunch you will still have to work for it.

2 comments:

Interesting, this is certainly a rather higher cost requirement than I thought would be necessary to make PFX worthwhile. Then again, that would also be an area that is likely to improve a lot while PFX matures for 4.0. Have you done any 4.0 tests yet?

I've not played with 4.0 yet, waiting for the 2010 Beta, things certainly seem to be improving all the time in the Pfx space, first release was dire, June CTP is something you can start to take more seriously. Good idea about trying 4.0, when I get chance I'll re-run the code against 4.0 and publish the results.

Post a Comment