Whilst teaching Developmentor's "Code Smarter with Design Patterns in .NET" class, a student pointed me towards this Gang of Four patterns crossword puzzle. To test your knowledge of the GoF patterns, click here. Enjoy!

Andy's observations as he continues to attempt to know all that is .NET...

Thursday, December 18, 2008

SQL Server 2008 and TransactionScope just got better

It's hard to deny that the TransactionScope programming model introduced in .NET 2.0 was pretty cool. My initial reaction when I saw it was "wow": I can now place transactional directives in the business layer and have everything inside that block, right down to the data layer, enlist in the transaction. However, disappointment was just around the corner when you discovered that multiple Open/Close pairs inside the same transaction caused the transaction to be promoted to the DTC ( see previous post ), even though you were talking to the same database instance.

There have been many solutions to this problem, all of which effectively change the way your data layer works so that it keeps a connection open across multiple data access layer calls. My implementation can be found here, and Alazel Acheson's here.

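The good news is that as of SQL Server 2008 this is no longer needed: the transaction is only promoted when you do indeed involve multiple resources. Opening and closing several connections one after another, using the same connection string, no longer triggers promotion; having two connections open at the same time still does.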
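A minimal sketch of the data-layer shape that now stays local, assuming the SqlClient provider (System.Data.SqlClient on .NET Framework, or the Microsoft.Data.SqlClient package on newer runtimes) and a SQL Server 2008 or later database. The `OrderRepository` class, the table names and the connection string you pass in are all hypothetical. Each call opens and closes its own connection, so the two connections are never open at the same time.

```csharp
using System;
using System.Data.SqlClient;   // Microsoft.Data.SqlClient on newer runtimes
using System.Transactions;

public static class OrderRepository // hypothetical data layer
{
    // Each call opens and closes its own connection, as a typical data layer does.
    public static void Execute(string connectionString, string sql, int orderId)
    {
        using (SqlConnection conn = new SqlConnection(connectionString))
        using (SqlCommand cmd = new SqlCommand(sql, conn))
        {
            cmd.Parameters.AddWithValue("@id", orderId);
            conn.Open();            // enlists in Transaction.Current, if there is one
            cmd.ExecuteNonQuery();
        }                           // closed before the next call opens a connection
    }

    // True only when the ambient transaction has been promoted to the DTC.
    public static bool IsDistributed()
    {
        Transaction current = Transaction.Current;
        return current != null &&
               current.TransactionInformation.DistributedIdentifier != Guid.Empty;
    }

    // Returns whether the work was promoted; under the conditions above this
    // is expected to be false against SQL Server 2008 or later.
    public static bool ShipOrder(string connectionString, int orderId)
    {
        using (TransactionScope scope = new TransactionScope())
        {
            // Two sequential open/close pairs against the same database.
            Execute(connectionString, "UPDATE Orders SET Shipped = 1 WHERE Id = @id", orderId);
            Execute(connectionString, "INSERT INTO OrderAudit (OrderId) VALUES (@id)", orderId);

            bool promoted = IsDistributed();
            scope.Complete();
            return promoted;
        }
    }
}
```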

Wednesday, November 19, 2008

PowerPoint to Microsoft OneNote

I often need to produce a OneNote notebook from a set of slides so that I can annotate and scribble notes against each slide. In the past I've imported the slides into a OneNote notebook manually; I finally got around to building a tool that automates the process. You can download it from http://www.beaconsoft.co.uk

Tuesday, October 28, 2008

PDC 2008, Day one

This is my first PDC, so I wasn't sure what to expect in terms of the quality of speakers and content. The keynote on cloud services and the new Windows platform, Windows Azure, was a little disappointing given that it was a developer audience. In essence Microsoft are providing a platform that lets other companies take advantage of large distributed data centres. Microsoft has some experience in this space from providing Windows Update, MSDN content, Hotmail and Live services; these services will be ported to the new platform, both to validate the platform themselves and to offer others the ability to outsource data centre services. There will be a short period during which these services are free, but in 2009 MS will start to charge for the cloud services based on usage and SLA.

Google's cloud-based computing has been a serious threat to MS's traditional inside-the-enterprise model, and companies have started looking to outsource basic IT; the Daily Telegraph recently announced that it is using Google for email and office applications. It remains to be seen whether large corporations with lots of IPR will ever rely on a third party to hold and manage their data, and this plays nicely into Microsoft's offering: they have concentrated on allowing enterprises to use the cloud together with existing applications inside the enterprise, perhaps enabling companies to shift over time and decide what is OK to put into the cloud. This is a key advantage they have over the Google offering. Another key advantage is that, as an MS developer, I continue to use the same tools and languages to target the cloud as I do inside the enterprise, and that's huge.

Whilst all that fluffy cloud stuff was interesting, for me the best talk was on C# 4.0 by Anders. Anders is not only a seriously smart guy but a great presenter too. The highlights were:

Dynamic language feature support

Co-variance on generic interfaces

Optional and named parameters

The dynamic language support was very cool. Microsoft has been working on the DLR ( Dynamic Language Runtime ) for some time to make it easier to write dynamic languages; IronPython and IronRuby use its common functionality to deliver dynamic language behaviour. C# will now make use of some of that same functionality by introducing the dynamic keyword, so I can write:

dynamic foo = 10;
foo = "hello";
foo = 5.6;

When you call a method on foo, the call is late bound: the member is resolved at run time rather than at compile time. That late binding is carried out through the DLR, with methods such as GetMember and InvokeMethod taking a string that represents the member or method name. The main aim of this is to allow better interop between statically typed languages and dynamic ones. Calling JavaScript from Silverlight, for example, now looks something like this:

<h1 id="title">Hello</h1>

dynamic element = HtmlPage.Document.title;
element.innerText = "Good Bye";

COM interop via this mechanism also means no PIAs, and a programming model that looks very easy to consume. It also has big implications for the Active Record pattern used by Ruby on Rails, and for processing XML documents using a dot notation that matches the element names. The creation of REST proxies would also seem extremely straightforward.
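In the version of C# 4.0 that eventually shipped, a variable declared dynamic is statically typed as object, and binding happens at run time through the DLR. A type can take control of that binding by deriving from System.Dynamic.DynamicObject and overriding methods such as TryGetMember and TrySetMember, which receive the member name through a binder. The PropertyBag class below is a hypothetical illustration, not code from the talk: it stores members in a dictionary, which is the same idea behind the dot-notation XML and Active Record scenarios mentioned above.

```csharp
using System.Collections.Generic;
using System.Dynamic;

// Hypothetical example: "properties" are created on first assignment.
public class PropertyBag : DynamicObject
{
    private readonly Dictionary<string, object> values = new Dictionary<string, object>();

    // Called for reads such as bag.Name; binder.Name holds the member name.
    // Returning false makes the runtime binder throw for unknown members.
    public override bool TryGetMember(GetMemberBinder binder, out object result)
    {
        return values.TryGetValue(binder.Name, out result);
    }

    // Called for writes such as bag.Name = "Andy".
    public override bool TrySetMember(SetMemberBinder binder, object value)
    {
        values[binder.Name] = value;
        return true;
    }
}
```

Usage: `dynamic bag = new PropertyBag(); bag.Name = "Andy";` after which `bag.Name` reads back "Andy", even though no Name property exists at compile time.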

With support for covariance, a List<string> can now be treated as an IEnumerable<object>, something that has been very frustrating in the past.
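A small illustration of what covariance allows, assuming C# 4.0 or later. IEnumerable<T> is declared with `out T`, so a sequence of strings can be passed where a sequence of objects is expected. The conversion only applies to variant interfaces and delegates with reference-type arguments: a List<int> still cannot be treated as an IEnumerable<object>, and a List<string> cannot become a List<object>. The VarianceDemo class is hypothetical.

```csharp
using System.Collections.Generic;

public static class VarianceDemo
{
    // Accepts any sequence of objects.
    public static int CountItems(IEnumerable<object> items)
    {
        int count = 0;
        foreach (object item in items)
        {
            count++;
        }
        return count;
    }

    public static int CountNames()
    {
        List<string> names = new List<string> { "Anders", "Andy" };
        // Implicit covariant conversion: IEnumerable<string> -> IEnumerable<object>.
        IEnumerable<object> objects = names;
        return CountItems(objects);
    }
}
```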

Finally, we can write C# methods with default parameter values:

public int Add( int lhs = 1, int rhs = 1 )

We can therefore call Add(), Add(1) and Add(1,1) to produce the same result, or use named arguments in any order:

Add( rhs: 1 , lhs : 1 )
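To make the calls above checkable, here is a complete version of the method, assuming a hypothetical static Calculator class and a body that simply adds the two values. Worth knowing: default values are baked into the calling code at compile time, so changing a default in a library only affects callers once they are recompiled.

```csharp
public static class Calculator
{
    // Both parameters are optional; an omitted argument takes the value 1.
    public static int Add(int lhs = 1, int rhs = 1)
    {
        return lhs + rhs;
    }
}
```

Usage: Add(), Add(1), Add(1, 1) and Add(rhs: 1, lhs: 1) all return 2, while Add(rhs: 5) returns 6.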

Day 2 is now calling, so hopefully there is plenty more goodness to come.

Wednesday, October 01, 2008

WARNING...Look after your speaker's badge

I noticed today that there is a site called http://www.whereisandyclymer.com/, so I clicked on it... and lo and behold, it's a series of strange locations featuring my speaker's card from Software Architect Week. A quick whois lookup revealed the culprit: a fellow Developmentor instructor....

Think I'll be looking after my speaker badge in future...

Random Sampling extension method

I recently had reason to select a random sample of data from a stream of elements. The number of samples I needed was fixed, but the number of elements in the input stream was unknown. I found various implementations of an IEnumerable extension method on the net, but all the ones I found cached the entire stream before selecting the sample, which clearly isn't scalable as the input stream grows.

After a look around I found this blog article that describes how to implement reservoir sampling without needing to keep all the items. This allowed me to write a RandomSample extension method on IEnumerable<T> to do what I needed.

using System;
using System.Collections.Generic;
using System.Linq;

public static class SamplingExtensions
{
    public static IEnumerable<T> RandomSample<T>(this IEnumerable<T> rows, int nSamples)
    {
        Random rnd = new Random();
        T[] sample = new T[nSamples];
        int nSamplesTaken = 0;

        foreach (T item in rows)
        {
            if (nSamplesTaken < sample.Length)
            {
                // Fill the reservoir with the first nSamples items
                sample[nSamplesTaken] = item;
            }
            else
            {
                // Item number (nSamplesTaken + 1) replaces a random reservoir slot
                // with probability nSamples / (nSamplesTaken + 1). Later items are
                // less likely to get in, but earlier items face more chances of
                // being replaced, so every item is equally likely to survive.
                int j = rnd.Next(nSamplesTaken + 1);
                if (j < nSamples)
                {
                    sample[j] = item;
                }
            }
            nSamplesTaken++;
        }

        if (nSamplesTaken >= nSamples)
        {
            return sample;
        }
        else
        {
            // Fewer items than requested: return only the slots actually filled
            return sample.Take(nSamplesTaken);
        }
    }
}

Thursday, September 18, 2008

Chrome support for Silverlight

The beta version of Chrome does not fully support Silverlight: the app appears to start, but no screen updates happen unless you keep resizing the browser. I've just switched to the Dev branch of Chrome and it now appears to be working.

You can switch via this web site.

Wednesday, September 17, 2008

.NET 3.5 SP1 prompts a rethink of the optimised lazy singleton

In the past I've used a version of double-checked locking to implement efficient lazy creation of a singleton in .NET, as I found it to be the most performant implementation. However, I've noticed recently that things are changing. When I run the code on the 64-bit CLR I see no difference between a type that has the beforefieldinit attribute and one that doesn't: both get initialised lazily in release mode, and extremely quickly. On the 64-bit CLR, double-checked locking turns out to be less performant, and therefore an unnecessary complication in the implementation.

In addition, I've recently installed .NET 3.5 SP1 and noticed a change in type initialisation on the 32-bit CLR. Whilst I still get the normal beforefieldinit behaviour, the lazy CLR-based initialisation is now extremely quick, almost the same as non-lazy initialisation of the type. More importantly, it is again faster than my optimised double-checked locking implementation.

My conclusion, therefore, is that moving forward I'm comfortable that the very simplest of singleton implementations is now acceptable, even in cases where I'm aggressively requesting an instance of the singleton.

Below are two implementations of a singleton: the first uses optimised locking, the second relies on CLR type initialisation to ensure lazy initialisation, even in a multithreaded environment.
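Whether a type carries beforefieldinit is decided by the C# compiler: a class with only static field initialisers is marked beforefieldinit, while adding an explicit static constructor (even an empty one) removes the flag and gives the stricter "initialise on first access" guarantee. A quick way to check, using reflection and two hypothetical classes:

```csharp
using System;
using System.Reflection;

// Only a static field initialiser: the compiler emits beforefieldinit.
public class InitialiserOnly
{
    public static readonly object Value = new object();
}

// Explicit (empty) type constructor: no beforefieldinit.
public class WithTypeConstructor
{
    public static readonly object Value = new object();

    static WithTypeConstructor() { }
}

public static class BeforeFieldInitCheck
{
    public static bool HasBeforeFieldInit(Type type)
    {
        return (type.Attributes & TypeAttributes.BeforeFieldInit) != 0;
    }
}
```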

public class OptimisedLazyLogger
{
    private static volatile OptimisedLazyLogger instance;
    private static object creationLock = new object();

    private OptimisedLazyLogger()
    {
    }

    public static OptimisedLazyLogger GetInstance()
    {
        if (instance == null)
        {
            CreateInstance();
        }
        return instance;
    }

    private static void CreateInstance()
    {
        // only one thread at any one time
        // can be inside this block
        lock (creationLock)
        {
            if (instance == null)
            {
                instance = new OptimisedLazyLogger();
            }
        }
    }
}

Implementation relying on CLR lazy initialisation

class LazyLogger
{
    private static LazyLogger instance;

    // Type constructor
    static LazyLogger()
    {
        instance = new LazyLogger();
    }

    // ensure new can not be used outside the type
    // to create an instance
    private LazyLogger()
    {
        Console.WriteLine("Lazy Logger Created");
    }

    // Public method to allow clients to get to the single
    // instance
    public static LazyLogger GetInstance()
    {
        return instance;
    }

    public void LogMsg(string msg)
    {
        Console.WriteLine("Logger: {0}", msg);
    }
}
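For comparison, .NET 4.0 (released after this post) added System.Lazy<T>, which packages up the same thread-safe lazy creation. With the default LazyThreadSafetyMode.ExecutionAndPublication mode, the factory runs at most once, even under contention. A sketch using a hypothetical Logger class:

```csharp
using System;

public sealed class Logger
{
    // The factory runs once, on the first access to Value.
    private static readonly Lazy<Logger> instance = new Lazy<Logger>(() => new Logger());

    private Logger() { }

    public static Logger GetInstance()
    {
        return instance.Value;
    }

    public void LogMsg(string msg)
    {
        Console.WriteLine("Logger: {0}", msg);
    }
}
```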

Wednesday, September 03, 2008

Google Chrome, new browser or new desktop os ?

Today I watched the Google Chrome demo, and I must say it looks awesome. For me it's not just a new browser, it's an attempt to create a desktop OS for running web apps. Even the language they use suggests that this is the overall aim:

"We see chrome as more of a window manager"

Other highlights that allude to this are:

Each tab runs in a separate, sandboxed process, and Chrome even has its own task manager to kill tabs and plugins

Compiled JavaScript, allowing you to make greater use of JavaScript in your pages

Web apps can be run without all the normal browser decoration, so they look like a normal desktop app; good uses of this are Google Calendar and Gmail

And to top it all, it's open source... and works with any search provider. Another cool feature is Incognito windows, which let you browse the web without recording the URLs in the history. This matters because Chrome uses the browser history to optimise its behaviour.

Google have created a desktop web platform that is optimised for searching and running web apps; perhaps in the not so distant future a machine that runs Chrome will be all we care about... I for one am going to be downloading Chrome and start using it...

Tuesday, August 19, 2008

Developmentor Pfx Article

I have written an article for Developmentor's Developments magazine on the June 2008 CTP of Microsoft Parallel Extensions, known as Pfx. To read the article click here.

Wednesday, July 30, 2008

Weak Observer

During a recent teach of Code Smarter with Design Patterns in .NET, in which we teach the Observer pattern using both the traditional GoF implementation and a more appropriate .NET version based on events, one student told me after class that he had never been keen on encouraging the use of event subscriptions, as he was never confident that his developers would remember to unsubscribe from events when they no longer needed them. This has in fact been a common problem in .NET code, and it can often induce a memory leak.

The typical case that is often presented is one where a Windows Form subscribes to an event on a different object, say some application object. When the form subscribes to the event, the application object holds a strong reference to the form so that it can deliver the events. When the form is closed, failing to unsubscribe from the event means there is still a strong reference to the form, which will ultimately prevent it from being GC'd. If this form has a large data table bound to a data grid, this could seriously impact available memory during the application's lifetime, as the data set will not be GC'd either.

Clearly the right thing is for all programmers to remember to unsubscribe, but we know that programmers often forget, and these leaks often go unnoticed until a customer screams. An alternative would be for the application object not to hold a reference at all, so that it cannot stop the window from being garbage collected; the obvious problem then is how it can deliver the event. There is, though, a halfway house: a weak reference. A weak reference provides a means to reference an object without preventing it from being GC'd, while still letting you get to it as long as the GC hasn't yet collected it.

As I said at the start, we provide two implementations of the observer pattern: one using interfaces (IObserver/IListener), the other using .NET events. Combining the interface approach with weak references is a relatively trivial extension to the standard observer pattern. The subject does not hold on to the observer/listener with a strong reference; it simply holds a weak reference. When the subject wishes to inform the observers of an event, it walks through its list of weak references, turning each one into a strong reference for the duration of the notification. If it fails to turn a weak reference into a strong reference, it knows the observer has been GC'd.
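A minimal sketch of the interface-based Weak Observer just described, assuming .NET 4.5 or later for the generic WeakReference<T>. The IListener, WeakSubject and RecordingListener names are hypothetical, not the code from the download, and the sketch is not thread safe.

```csharp
using System;
using System.Collections.Generic;

public interface IListener
{
    void Notify(object sender, string message);
}

public class WeakSubject
{
    // Only weak references are held, so the subject never keeps a listener alive.
    private readonly List<WeakReference<IListener>> listeners = new List<WeakReference<IListener>>();

    public void Subscribe(IListener listener)
    {
        listeners.Add(new WeakReference<IListener>(listener));
    }

    public void Publish(string message)
    {
        // Turn each weak reference into a strong one for the duration of the
        // notification, dropping entries whose listener has been collected.
        List<IListener> alive = new List<IListener>();
        listeners.RemoveAll(weak =>
        {
            if (weak.TryGetTarget(out IListener target))
            {
                alive.Add(target);
                return false;
            }
            return true;
        });

        foreach (IListener listener in alive)
        {
            listener.Notify(this, message);
        }
    }
}

// Example listener, for illustration only.
public class RecordingListener : IListener
{
    public List<string> Received { get; } = new List<string>();

    public void Notify(object sender, string message)
    {
        Received.Add(message);
    }
}
```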

Implementing this behaviour with events is a little more involved, as the delegate chain holds the reference to the observer as a normal strong reference, and that is something we can't change. This is a shame, since we would love to have the choice of holding it as a weak reference, giving us a solution similar to the interface-based Weak Observer. To provide an event-style API we need to be a bit more cunning, and also take a performance hit. What I have done is keep the normal event subscribe/unsubscribe using a delegate, but then crack open the delegate to reveal the target instance and target method. Once I have the target method and instance, I wrap the instance in a weak reference held by what I call a WeakEventHandle, and place the handle into a handle list. When asked to fire the event, each handle is visited in turn and the target method is invoked via reflection. Invoking the method via reflection is not optimal in terms of performance, and limits the possibility of running the application with reduced permissions.

private WeakEventMgr<SomeEventArgs> someEvent = new WeakEventMgr<SomeEventArgs>();

public event EventHandler<SomeEventArgs> SomeEvent
{
    add { someEvent.Add(value); }
    remove { someEvent.Remove(value); }
}

// .....

protected virtual void OnSomeEvent(SomeEventArgs someEventArgs)
{
    someEvent.Notify(this, someEventArgs);
}

Above is the code needed to expose a weak event; each event has its own instance of WeakEventMgr<T>. The event's add/remove accessors are explicitly implemented to use WeakEventMgr as the backing store for the subscribers. Finally, to follow the Microsoft pattern for event dispatch, a protected virtual method exists to raise the event. To make adding weak events easier I created a snippet.
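The WeakEventMgr<T> class itself lives in the download. What follows is a hypothetical reconstruction that matches the Add/Remove/Notify usage shown above and follows the approach described earlier: split each delegate into its Target and Method, hold the target through a WeakReference, and invoke the method via reflection. One caveat applies to any design like this: a lambda that captures local variables has a compiler-generated closure as its Target, and since nothing else references that closure it can be collected almost immediately, silently unsubscribing the handler.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

public class WeakEventMgr<TArgs> where TArgs : EventArgs
{
    // One subscriber: a weakly held target plus the method to call on it.
    private sealed class WeakEventHandle
    {
        private readonly WeakReference target; // null for static handlers
        public MethodInfo Method { get; }

        public WeakEventHandle(Delegate handler)
        {
            Method = handler.Method;
            target = handler.Target == null ? null : new WeakReference(handler.Target);
        }

        public bool IsStatic => target == null;

        // Strong reference to the target, or null if it has been collected.
        public object Target => target?.Target;

        public bool Matches(Delegate handler) =>
            handler.Method == Method && ReferenceEquals(handler.Target, Target);
    }

    private readonly List<WeakEventHandle> handles = new List<WeakEventHandle>();
    private readonly object sync = new object();

    // Assumes ordinary instance or static handlers (not static methods
    // closed over their first argument, such as extension methods).
    public void Add(EventHandler<TArgs> handler)
    {
        if (handler == null) return;
        lock (sync)
        {
            // A multicast delegate is split into its individual subscribers.
            foreach (Delegate single in handler.GetInvocationList())
                handles.Add(new WeakEventHandle(single));
        }
    }

    public void Remove(EventHandler<TArgs> handler)
    {
        if (handler == null) return;
        lock (sync)
        {
            foreach (Delegate single in handler.GetInvocationList())
            {
                int index = handles.FindLastIndex(h => h.Matches(single));
                if (index >= 0) handles.RemoveAt(index);
            }
        }
    }

    public void Notify(object sender, TArgs args)
    {
        var calls = new List<KeyValuePair<MethodInfo, object>>();
        lock (sync)
        {
            for (int i = 0; i < handles.Count; )
            {
                object target = handles[i].Target; // strong reference for this notification
                if (!handles[i].IsStatic && target == null)
                {
                    handles.RemoveAt(i); // subscriber has been garbage collected
                }
                else
                {
                    calls.Add(new KeyValuePair<MethodInfo, object>(handles[i].Method, target));
                    i++;
                }
            }
        }

        // Invoke outside the lock, so handlers may subscribe or unsubscribe safely.
        foreach (var call in calls)
            call.Key.Invoke(call.Value, new object[] { sender, args });
    }
}
```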

Using one of the Weak Observer implementations means there is less need to worry about ensuring that all subscribers have unregistered, since event subscriptions will no longer keep an object alive.

You can download the code here. The code demonstrates the classic problem of form leakage.

Sunday, July 20, 2008

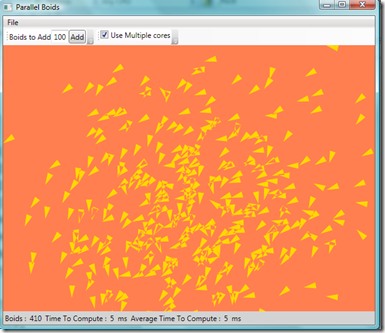

Parallel Boids

For the last two weeks I've found myself immersed in the new Pfx CTP release. I must say it has come on loads since the last drop in December. Some of the issues I spoke about in a previous blog post have now been fixed, such as better algorithms for Parallel.For. Perhaps the best addition is the set of concurrent data structures ( CDS ).

The main reason for digging into Pfx over the last two weeks was to write an article for the August edition of Developmentor's "Developments" monthly newsletter, which attempts to cover the highlights of the recent CTP. Having completed that, I had to find some fun use of Pfx, so I ported a Developmentor favourite, "Boids". Anyone who has attended Developmentor's Essential .NET or Guerilla .NET will probably have encountered this app, but for those not in the know, it is a simulation of flocking behaviour.

I took the initial code written by Jason Whittington and started tuning it for performance, initially using just one core. The main task was to reduce the number of loops: instead of having two consecutive loops that iterate over the same set of data, I simply combined them. The second optimisation was to replace foreach with a regular for loop. After that I replaced the main outer for loop with Parallel.For, and voilà, a reasonable speed-up...

I've run the code on my dual core and on an eight-way machine, and in both cases observed a reasonable speed-up... Not bad, considering it wasn't a massive amount of effort.

You can download the code from here
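As a rough illustration of the steps described in this post (not Jason's Boids code), here is a simplified flocking step: a single inner loop gathers both cohesion and separation data, and the outer loop over boids uses Parallel.For from System.Threading.Tasks, as shipped in .NET 4.0 (the CTP API was similar). Each iteration only reads the shared current array and writes its own slot of a separate output array, so no locking is needed. The Boid struct, the rules and the constants are hypothetical simplifications.

```csharp
using System.Threading.Tasks;

public struct Boid
{
    public double X, Y, VX, VY;
}

public static class Flock
{
    const double Radius = 10.0;      // neighbourhood radius (hypothetical value)
    const double MinDistance = 0.5;  // closer than this and boids push apart
    const double Cohesion = 0.01;
    const double Separation = 0.05;

    public static Boid[] Step(Boid[] current)
    {
        Boid[] next = new Boid[current.Length];

        // Outer loop in parallel: iteration i reads 'current' and writes only next[i].
        Parallel.For(0, current.Length, i =>
        {
            Boid b = current[i];
            double cx = 0, cy = 0, sx = 0, sy = 0;
            int neighbours = 0;

            // One combined inner pass gathers both cohesion and separation data.
            for (int j = 0; j < current.Length; j++)
            {
                if (j == i) continue;
                double dx = current[j].X - b.X;
                double dy = current[j].Y - b.Y;
                double distSq = dx * dx + dy * dy;
                if (distSq < Radius * Radius)
                {
                    cx += current[j].X;
                    cy += current[j].Y;
                    neighbours++;
                    if (distSq < MinDistance * MinDistance)
                    {
                        sx -= dx;
                        sy -= dy;
                    }
                }
            }

            if (neighbours > 0)
            {
                b.VX += (cx / neighbours - b.X) * Cohesion + sx * Separation;
                b.VY += (cy / neighbours - b.Y) * Cohesion + sy * Separation;
            }
            b.X += b.VX;
            b.Y += b.VY;
            next[i] = b;
        });

        return next;
    }
}
```

Because each result depends only on the previous positions, the output is the same whichever order the iterations run in.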

Friday, June 06, 2008

Software Architect Week Demos

I had a lot of fun again at Software Architect Week; the demos for the Design Patterns day can be downloaded from here.

Sunday, May 18, 2008

Classic Snake in Silverlight 2

Inspired by Dave Wheeler's article in VSJ magazine, I decided it was time to write a simple game in Silverlight 2. The game I chose was the classic game of Snake.

Whilst there is no doubt Silverlight 2 is a massive step closer to a .NET environment in the browser, there are still a few bits and pieces that trip you up. Some of the collection data structures, such as LinkedList, Queue and Stack, are not supported: there has been an underlying effort to keep the platform install as small as possible, so classes whose functionality can easily be written using other classes have been tossed out.

As for WPF feature parity, things have massively improved, since we now have controls, not just simple shapes. There is also now support for data binding and resources. Things still trip you up, though: transforms don't support data binding, and attempting it results in less than obvious errors.

You can play the game here and download the source from here

Saturday, May 03, 2008

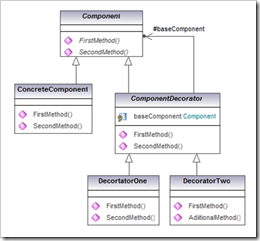

CodeGen for base decorator

One of the patterns in Developmentor's "Code Smarter with Design Patterns in .NET" course is the decorator pattern. The decorator pattern allows you to extend functionality at runtime; this works if the client is written in terms of an abstract type. Using the class hierarchy in the diagram, the client would be written in terms of the Component class. The code wishing to call the client has been given an instance of ConcreteComponent, but it wants to extend the functionality of that object. To do this it must create another object that looks like what the client is expecting. This is where the decorator comes in: the ComponentDecorator class is compatible with the client, since it also derives from Component.

The ComponentDecorator class is a null decorator: it simply supports the ability to wrap an object of type Component, and when any of its methods are invoked the call is passed on to the wrapped object. Since it has no real functionality it is also declared abstract. DecoratorOne and DecoratorTwo each override only the methods they wish to extend; the methods they do not wish to extend are handled by the base class ComponentDecorator and forwarded to the wrapped object. Before calling the client, a decorator object is created and given the object it is to decorate, in this case something of type Component. It is this decorated object that is passed into the client.
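A minimal C# rendering of the hierarchy just described, using the same class names. The two methods and the strings they return are hypothetical, chosen only to make the forwarding visible.

```csharp
public abstract class Component
{
    public abstract string Operation();
    public abstract string OtherOperation();
}

public class ConcreteComponent : Component
{
    public override string Operation() => "concrete";
    public override string OtherOperation() => "other";
}

// Null decorator: forwards every call to the wrapped component.
public abstract class ComponentDecorator : Component
{
    private readonly Component inner;

    protected ComponentDecorator(Component inner)
    {
        this.inner = inner;
    }

    public override string Operation() => inner.Operation();
    public override string OtherOperation() => inner.OtherOperation();
}

// Extends only Operation; OtherOperation is forwarded by the base class.
public class DecoratorOne : ComponentDecorator
{
    public DecoratorOne(Component inner) : base(inner) { }

    public override string Operation() => "one(" + base.Operation() + ")";
}

// Extends only OtherOperation.
public class DecoratorTwo : ComponentDecorator
{
    public DecoratorTwo(Component inner) : base(inner) { }

    public override string OtherOperation() => "two(" + base.OtherOperation() + ")";
}
```

Usage: for `Component c = new DecoratorTwo(new DecoratorOne(new ConcreteComponent()));`, c.Operation() returns "one(concrete)" and c.OtherOperation() returns "two(other)".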

Building the ComponentDecorator class can become very tedious if the Component class has lots of methods that need to be implemented. This week I finally got around to writing some code that uses reflection and the CodeDom classes to generate the base decorator class, saving a reasonable amount of time and effort.
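The generator described here used CodeDom. The sketch below swaps that for plain string building to stay short, but the reflection side is the same idea: find the public abstract and virtual methods of the component type and emit an override for each that forwards to the wrapped instance. DecoratorGenerator is a hypothetical name, and the sketch deliberately skips properties, events, generic methods, and generic or ref/out parameter types.

```csharp
using System;
using System.Linq;
using System.Reflection;
using System.Text;

public static class DecoratorGenerator
{
    // Emits C# source for an abstract "null decorator" that forwards to a wrapped instance.
    public static string Generate(Type component)
    {
        string name = component.Name + "Decorator";
        var sb = new StringBuilder();
        sb.AppendLine($"public abstract class {name} : {component.FullName}");
        sb.AppendLine("{");
        sb.AppendLine($"    private readonly {component.FullName} inner;");
        sb.AppendLine($"    protected {name}({component.FullName} inner) {{ this.inner = inner; }}");

        var methods = component.GetMethods(BindingFlags.Public | BindingFlags.Instance)
            .Where(m => m.IsVirtual && !m.IsFinal          // abstract or overridable
                        && !m.IsSpecialName                // skip property/event accessors
                        && !m.IsGenericMethod
                        && m.DeclaringType != typeof(object)
                        && IsSimple(m.ReturnType)
                        && m.GetParameters().All(p => IsSimple(p.ParameterType)));

        foreach (MethodInfo m in methods)
        {
            ParameterInfo[] ps = m.GetParameters();
            string parameters = string.Join(", ", ps.Select(p => $"{TypeName(p.ParameterType)} {p.Name}"));
            string arguments = string.Join(", ", ps.Select(p => p.Name));
            string ret = m.ReturnType == typeof(void) ? "" : "return ";
            sb.AppendLine($"    public override {TypeName(m.ReturnType)} {m.Name}({parameters}) {{ {ret}inner.{m.Name}({arguments}); }}");
        }

        sb.AppendLine("}");
        return sb.ToString();
    }

    // Only non-generic, non-ref, non-pointer types are handled by this sketch.
    private static bool IsSimple(Type t) => !t.IsGenericType && !t.IsByRef && !t.IsPointer;

    private static string TypeName(Type t) => t == typeof(void) ? "void" : t.FullName;
}
```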

Thursday, April 03, 2008

Wednesday, April 02, 2008

Generic Sum

It's been a while since I blogged about trying to re-implement the C++ STL using .NET generics. Last night I was skimming through an excellent book on LINQ, "LINQ in Action", published by Manning, and whilst reading the section on how expression trees work in C# v3.0 it occurred to me that I could potentially use them to create a generic Sum method.

First, a quick look at C# v3.0 expressions. Here the lambda expression is not turned into a method, as would be the norm for anonymous methods; instead it is turned into an AST (Abstract Syntax Tree) that describes the function of the lambda expression.

Expression<Func<int, int, int>> addExpr = (lhs, rhs) => lhs + rhs;
Func<int, int, int> addMethod = addExpr.Compile();
Console.WriteLine(addMethod(5, 5));

In this case the root node of the expression tree is a Lambda node, which contains a single Add node; Add is a binary operator and therefore contains two further nodes, for the left-hand and right-hand sides of the add operation.
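The tree shape described above can be checked at run time by walking the expression's nodes. This small sketch, using System.Linq.Expressions and a hypothetical ExpressionShape class, inspects the same addExpr lambda and returns "Lambda(Add(lhs, rhs))".

```csharp
using System;
using System.Linq.Expressions;

public static class ExpressionShape
{
    public static string Describe()
    {
        Expression<Func<int, int, int>> addExpr = (lhs, rhs) => lhs + rhs;

        // The root is a lambda whose body is a binary Add node.
        BinaryExpression body = (BinaryExpression)addExpr.Body;
        ParameterExpression left = (ParameterExpression)body.Left;
        ParameterExpression right = (ParameterExpression)body.Right;

        return addExpr.NodeType + "(" + body.NodeType + "(" + left.Name + ", " + right.Name + "))";
    }
}
```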

Reflector spits out the following code

private static void Main(string[] args)
{
    ParameterExpression CS$0$0000;
    ParameterExpression CS$0$0001;
    Console.WriteLine(Expression.Lambda<Func<int, int, int>>(
        Expression.Add(
            CS$0$0000 = Expression.Parameter(typeof(int), "lhs"),
            CS$0$0001 = Expression.Parameter(typeof(int), "rhs")),
        new ParameterExpression[] { CS$0$0000, CS$0$0001 }).Compile()(5, 5));
}

This got me thinking: could I build an expression using generic arguments? The code spat out by Reflector certainly suggests it's possible. My first crack was as follows:

public static T Sum<T>(T[] vals)
{
    Expression<Func<T, T, T>> addExpr = (lhs, rhs) => lhs + rhs;
    T total = default(T);
    Func<T, T, T> addMethod = addExpr.Compile();
    foreach (T val in vals)
    {
        total = addMethod(total, val);
    }
    return total;
}

Unfortunately this failed with the typical "Operator '+' cannot be applied to operands of type 'T' and 'T'" error, which has always been the reason you can't easily write a generic Sum in .NET. However, not to be put off, I built the expression tree by hand:

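private static Func<T, T, T> CreateAdd<T>()
{
    ParameterExpression lhs = Expression.Parameter(typeof(T), "lhs");
    ParameterExpression rhs = Expression.Parameter(typeof(T), "rhs");
    Expression<Func<T, T, T>> addExpr = Expression.Lambda<Func<T, T, T>>(
        Expression.Add(lhs, rhs),
        new ParameterExpression[] { lhs, rhs });
    Func<T, T, T> addMethod = addExpr.Compile();
    return addMethod;
}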

The method above now returns a delegate instance that adds two values both of type T, and returns a value as type T.

Generic Sum can now be rewritten as follows

public static T Sum<T>(T[] vals)
{
    T total = default(T);
    Func<T, T, T> addMethod = CreateAdd<T>();
    foreach (T val in vals)
    {
        total = addMethod(total, val);
    }
    return total;
}

This now works for arrays of ints, doubles etc. It will not work for types the Add expression doesn't support, since Expression.Add only handles the built-in numeric types and types that define their own + operator. Even so, it is certainly a lot simpler than the approach I took a year or so ago, although not as flexible. It's a shame that the C# compiler's expression tree builder won't accept generic arguments, since it is possible to represent such an expression tree, and that would make generating this kind of code a lot easier.
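One refinement worth considering: the Sum above calls CreateAdd<T>() and so compiles a fresh expression tree on every call, which is comparatively expensive. A generic static class gives each T its own static field, so the compiled delegate can be built once per type and reused. GenericMath and Adder<T> are hypothetical names; an unsupported T (string, for example) surfaces as a TypeInitializationException on first use.

```csharp
using System;
using System.Linq.Expressions;

public static class Adder<T>
{
    // Built once per closed type, e.g. once for Adder<int>, once for Adder<double>.
    public static readonly Func<T, T, T> Add = Create();

    private static Func<T, T, T> Create()
    {
        ParameterExpression lhs = Expression.Parameter(typeof(T), "lhs");
        ParameterExpression rhs = Expression.Parameter(typeof(T), "rhs");
        // Expression.Add binds to the built-in numeric add or to a user-defined operator +.
        return Expression.Lambda<Func<T, T, T>>(Expression.Add(lhs, rhs), lhs, rhs).Compile();
    }
}

public static class GenericMath
{
    public static T Sum<T>(T[] vals)
    {
        Func<T, T, T> add = Adder<T>.Add;
        T total = default(T);
        foreach (T val in vals)
        {
            total = add(total, val);
        }
        return total;
    }
}
```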

Looking for a C# Design Patterns book

I co-author Developmentor's Code Smarter with Design Patterns in .NET course with Kevin Jones, and ever since the creation of the course I've been on the lookout for a good book to recommend to students. The one I currently recommend is the excellent Head First Design Patterns; the only rub is that it's in Java, but in terms of teaching patterns it's awesome.

I recently decided to order the latest C# 3.0 Design Patterns from O'Reilly, written by Judith Bishop, to see if I could recommend that text. Yesterday it arrived, and I skimmed through it looking at various classic patterns (Observer, Template...), and my, was I shocked. The implementation of some of the patterns is just plain weird, and the basic structure of some of the patterns is plainly wrong as defined by the GoF.

I've not been through the entire catalogue of patterns, but here are some examples.

The Template pattern is shown using composition rather than inheritance to bind the template method to the template steps; this is plainly wrong, and the implementation is in effect a Strategy pattern. As for the Strategy pattern examples, none of them really shows the use of supplying runtime-specific behaviour to a subsystem or algorithm.

The Observer pattern uses a weird combination of delegates and an IObserver interface. It's either one or the other, and for .NET it is almost certainly pure delegates/events; there is no obvious reason why she has used both.

The Abstract Factory pattern makes use of a generic interface for the Abstract Factory, but nowhere in the interface is the generic argument referenced, so it is seemingly redundant.

The command pattern mixes the implementation of the invoker with the implementation of the command.
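To make the Template/Strategy distinction above concrete, a minimal sketch with hypothetical classes: in Template Method the fixed algorithm lives in a base class and the variable steps are abstract methods bound by inheritance; in Strategy the algorithm holds a reference to a separate object, supplied at run time, and delegates to it by composition.

```csharp
using System;

// Template Method: the skeleton is fixed in the base class; subclasses supply the steps.
public abstract class ReportTemplate
{
    // The template method; not virtual, so subclasses cannot change the skeleton.
    public string Render()
    {
        return Header() + "|" + Body();
    }

    protected abstract string Header();  // template steps, bound by inheritance
    protected abstract string Body();
}

public class SalesReport : ReportTemplate
{
    protected override string Header() => "Sales";
    protected override string Body() => "42 units";
}

// Strategy: the behaviour is a separate object passed in at run time (composition).
public interface ISortOrder
{
    int Compare(int a, int b);
}

public class Descending : ISortOrder
{
    public int Compare(int a, int b) => b.CompareTo(a);
}

public class Sorter
{
    private readonly ISortOrder order;

    public Sorter(ISortOrder order) { this.order = order; }

    public int[] Sort(int[] values)
    {
        int[] copy = (int[])values.Clone();
        Array.Sort(copy, (a, b) => order.Compare(a, b));
        return copy;
    }
}
```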

In conclusion, I'm not convinced the author either knows the patterns as defined by the GoF or is highly proficient in C# v3.0. So my quest continues to find a good C# patterns book I can recommend for class...

Wednesday, February 13, 2008

True Generic Singleton

Last weekend I was teaching Code Smarter with Design Patterns in .NET, yep, that's right, a weekend... The guys at Intelliflo decided they didn't have time for training during the regular week, so they wanted me to pack a 4-day course into 3 days over the weekend, and we worked from 9:00 until 19:00...

Whilst I was teaching them the Singleton pattern, they brought out a version they were using that was built on generics.

public class AlmostSingleton<T> where T : new()
{
    private static T instance;
    private static object initLock = new object();

    public static T GetInstance()
    {
        if (instance == null)
        {
            CreateInstance();
        }
        return instance;
    }

    private static void CreateInstance()
    {
        lock (initLock)
        {
            if (instance == null)
            {
                instance = new T();
            }
        }
    }
}

public class Highlander : AlmostSingleton<Highlander>
{
    public Highlander()
    {
        Console.WriteLine("There can be only one...");
    }
}

static void Main(string[] args)
{
    Highlander highlander = Highlander.GetInstance();
    Highlander highlander2 = Highlander.GetInstance();
    Debug.Assert(object.ReferenceEquals(highlander, highlander2));
}

A bit of googling around shows that a few people are advocating similar solutions. On the surface all looks well, but to be really true to the singleton pattern, the singleton type should only ever have a single instance. This implementation requires T to have a public constructor, so whilst it is certainly possible to always get back to a common instance via the GetInstance method, what is lacking, and what the singleton pattern strictly requires, is enforcement of a single instance of the type. Because the type has a public constructor, another client could easily create an instance instead of calling GetInstance().

static void Main(string[] args)
{
    Highlander highlander = Highlander.GetInstance();
    Highlander highlander2 = Highlander.GetInstance();
    Debug.Assert(object.ReferenceEquals(highlander, highlander2));

    // This will create a second instance of Highlander..
    // not what we want
    Highlander highlander3 = new Highlander();
}

In fact, supporting true singleton behaviour isn't too hard: it simply requires a bit of reflection to create the object instance, rather than relying on the typical language new construct.

using System;
using System.Reflection;

public class Highlander : Singleton<Highlander>
{
    private Highlander()
    {
        Console.WriteLine("There can be only one...");
    }
}

public class Singleton<T>
{
    private static T instance;
    private static object initLock = new object();

    public static T GetInstance()
    {
        if (instance == null)
        {
            CreateInstance();
        }
        return instance;
    }

    private static void CreateInstance()
    {
        lock (initLock)
        {
            if (instance == null)
            {
                Type t = typeof(T);

                // Ensure there are no public constructors...
                ConstructorInfo[] ctors = t.GetConstructors();
                if (ctors.Length > 0)
                {
                    throw new InvalidOperationException(
                        String.Format("{0} has at least one accessible ctor making it impossible to enforce singleton behaviour",
                            t.Name));
                }

                // Create an instance via the private constructor
                instance = (T)Activator.CreateInstance(t, true);
            }
        }
    }
}

Now any attempt to create an instance outside the Highlander class using the new keyword produces a compiler error, thus enforcing the true singleton pattern. I've also added a guard so that when you use the generic wrapper it checks that the type has no public constructor, which would ultimately allow a client to bypass the singleton functionality.

Thursday, January 31, 2008

Safe Event snippet

This week I'm teaching the course I co-wrote with Kevin Jones, "Code Smarter with Design Patterns in .NET". As part of the course we introduce the observer pattern, and whilst the first part of the deck deals with the basic principles, we soon move on to the preferred way of doing it in .NET, using delegates/events.

When using events in a multithreaded environment, subscribing to and unsubscribing from an event is thread safe, but care needs to be taken when raising it.

Typically we see code like this

public class Subject
{
    public event EventHandler<EventArgs> Alert;

    // ...

    protected virtual void OnAlert(EventArgs args)
    {
        if (Alert != null)
        {
            Alert(this, args);
        }
    }
}

Here a check is made to ensure the delegate chain has at least one subscriber. However, whilst this works in a single-threaded environment, in a multithreaded environment there is a race condition between the if and the firing of the event: another thread can unsubscribe the last handler in between, and the call then throws a NullReferenceException.

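One way around this problem is to copy the delegate reference into a local variable and test that copy instead. This is safe because delegates are immutable: subscribing or unsubscribing builds a new delegate list rather than modifying the one the local copy refers to, which is also what makes subscribe/unsubscribe thread safe. However, there is a simpler approach, and that is to use the null object pattern: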

public event EventHandler<EventArgs> Alert = delegate { };

This simply creates a delegate instance at the head of the delegate chain which is never removed, so it's then safe to simply do

Alert(this, args);

without checking for null, since nothing outside the class has a reference to the empty handler and so it can never be unsubscribed. OK, there is an overhead, but it certainly makes the logic simpler and less prone to occasional, hard-to-find race conditions. Remembering to do this could be a pain, so I wrote a simple snippet to do it... Now I simply type safeevent TAB TAB and I always get an event with a null handler... another great use of anonymous methods...
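For comparison, the local-copy approach mentioned above, as a minimal sketch (LocalCopySubject and RaiseAlert are hypothetical names). C# 6 later added the null-conditional form `Alert?.Invoke(this, args)`, which reads the field once in the same way.

```csharp
using System;

public class LocalCopySubject
{
    public event EventHandler<EventArgs> Alert;

    protected virtual void OnAlert(EventArgs args)
    {
        // Read the field once. Delegates are immutable, so another thread
        // unsubscribing afterwards cannot turn this local copy into null.
        EventHandler<EventArgs> handler = Alert;
        if (handler != null)
        {
            handler(this, args);
        }
    }

    public void RaiseAlert()
    {
        OnAlert(EventArgs.Empty);
    }
}
```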

Friday, January 25, 2008

Not so much killer app, but killer content

It used to be the case that a computer/OS would succeed if it had the killer app, the app everyone needed; the spreadsheet was one of the first of that kind. Now, in the new media streaming age, perhaps it's killer content that will help to push the adoption of new technology. A student told me last week that Microsoft has done a deal so that MSN is the official home of the 2008 Olympics; the site will provide hours and hours of online coverage, brought to you by Silverlight. Could there be any better vehicle for getting the Silverlight plugin onto every home/office PC?

Monday, January 21, 2008

The potential danger of too much access to too much data

The UK government is set to build a series of large-scale databases containing children's data. The charity Action on Rights for Children has put together a three-part video detailing some of their concerns about this scheme.

http://www.youtube.com/watch?v=LRQr2VrtX-0

http://www.youtube.com/watch?v=KH-1IumXZbI

http://www.youtube.com/watch?v=IyXCSg-lRkA

Friday, January 18, 2008

Ensure local transaction snippet

Whilst teaching Developmentor's enet 3 class this week I had an idea for a new snippet. It came to me whilst teaching the deck on System.Transactions; I was in the middle of demonstrating how you need to be really careful not to cause a transaction to be promoted to the DTC (see previous blog article). Two strategies I suggest are:

- Stop the DTC: net stop MSDTC

- Add a Debug.Assert statement inside your TransactionScope block to ensure the current transaction is not a distributed transaction, by checking that its DistributedIdentifier is an empty GUID.

The first option is reasonable if you never need the DTC, but if you do have bits of code that need the DTC it's clearly not an option. The second approach is a bit tedious, but if you use a snippet then it's not all that bad. Below is the snippet code:

<CodeSnippet
    Format="1.0.0"
    xmlns="http://schemas.microsoft.com/VisualStudio/2005/CodeSnippet">
  <Header>
    <Title>Local Transaction</Title>
    <Author>Andrew Clymer</Author>
    <Shortcut>lscope</Shortcut>
    <Description>Creates a transaction scope and ensures at the end of the block that the transaction is only a local transaction</Description>
    <SnippetTypes>
      <SnippetType>SurroundsWith</SnippetType>
      <SnippetType>Expansion</SnippetType>
    </SnippetTypes>
  </Header>
  <Snippet>
    <Declarations>
      <Literal>
        <ID>scope</ID>
        <Default>scope</Default>
      </Literal>
    </Declarations>
    <Code Language="CSharp">
      <![CDATA[
using (TransactionScope $scope$ = new TransactionScope())
{
    $selected$
    $end$
    Debug.Assert(Transaction.Current.TransactionInformation.DistributedIdentifier == Guid.Empty,
        "Unexpected!! Transaction is now distributed");
}
]]>
    </Code>
  </Snippet>
</CodeSnippet>
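The check the lscope snippet inserts can be exercised directly. A minimal sketch, assuming a project that references System.Transactions; LocalScopeDemo, RunInLocalScope and its work parameter are hypothetical names. The snippet itself leaves out scope.Complete(); the sketch calls it after the check so the assertion still sees the live transaction and the work commits rather than rolling back.

```csharp
using System;
using System.Diagnostics;
using System.Transactions;

public static class LocalScopeDemo
{
    // Roughly what the lscope snippet expands to, wrapped around a caller-supplied
    // piece of work (hypothetical). Returns the DistributedIdentifier observed.
    public static Guid RunInLocalScope(Action work)
    {
        Guid distributedId;
        using (TransactionScope scope = new TransactionScope())
        {
            work(); // data access against a single resource would go here

            // An empty GUID means the transaction has not been promoted to the DTC.
            distributedId = Transaction.Current.TransactionInformation.DistributedIdentifier;
            Debug.Assert(distributedId == Guid.Empty,
                "Unexpected!! Transaction is now distributed");

            scope.Complete(); // vote to commit; not part of the original snippet
        }
        return distributedId;
    }
}
```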

Sunday, January 13, 2008

Pfx, Parallel Extensions take advantage of multiple cores, but be careful...

Microsoft has now made available a parallel library for .NET. It's still in its early stages, and its aim is to make it easier to write applications that take advantage of multiple cores. Pfx really has two layers: one offering a relatively high level of abstraction, providing Parallel.For, Parallel.Do and parallel LINQ, and a lower level allowing programmers to schedule individual Tasks.

This has been a long time coming; .NET support for concurrency is pretty primitive even in .NET 3.0. Simple things like kicking off N tasks and waiting for them all to complete require a reasonable amount of code, but on the surface Pfx looks to assist here. I spent a couple of days over Christmas looking at it and understanding how it works.

So my first task was to find some code I wanted to parallelise. As a good async demo I frequently use a piece of logic I stole from the book "How long is a piece of string", which uses many iterations to work out the value of PI, so I thought I would have a go at parallelising this piece of code.

private const int N_ITERATIONS = 1000000000;

private static double CalcPi()
{
    double pi = 1.0;
    double multiply = -1;
    for (int nIteration = 3; nIteration < N_ITERATIONS; nIteration += 2)
    {
        pi += multiply * (1.0 / (double)nIteration);
        multiply *= -1;
    }
    return pi * 4.0;
}

Looking at the algorithm it doesn't look that complicated: all I need to do is make each core do a different part of the for loop, then combine the results from each core and multiply by 4.0. In fact I've done this using the basic BeginInvoke functionality before, so I know it scales...
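A minimal sketch of that split, using plain threads (one contiguous range of terms per thread) rather than the BeginInvoke approach mentioned above. The series is written as the sum of (-1)^k / (2k + 1), with k = 0 being the initial 1.0 in CalcPi. The one subtlety when splitting it is that each range must take its starting sign from its absolute index k, rather than always starting at -1. PartitionedPi, PartialSum and the term/worker counts are illustrative names, not the author's code.

```csharp
using System;
using System.Threading;

public static class PartitionedPi
{
    // Sum of (-1)^k / (2k + 1) for k in [start, stop). The sign comes from the
    // absolute index k, so every partition starts with the correct sign.
    static double PartialSum(long start, long stop)
    {
        double sum = 0.0;
        double sign = (start % 2 == 0) ? 1.0 : -1.0;
        for (long k = start; k < stop; k++)
        {
            sum += sign / (2.0 * k + 1.0);
            sign = -sign;
        }
        return sum;
    }

    // Splits terms [0, terms) into one contiguous range per worker thread,
    // then combines the partial sums and multiplies by 4.
    public static double CalcPi(long terms, int workers)
    {
        double[] partials = new double[workers];
        Thread[] threads = new Thread[workers];
        long chunk = terms / workers;

        for (int w = 0; w < workers; w++)
        {
            int index = w; // stable copy for the lambda
            long start = index * chunk;
            long stop = (index == workers - 1) ? terms : start + chunk;
            threads[w] = new Thread(() => partials[index] = PartialSum(start, stop));
            threads[w].Start();
        }

        double total = 0.0;
        for (int w = 0; w < workers; w++)
        {
            threads[w].Join(); // wait for the worker before reading its result
            total += partials[w];
        }
        return total * 4.0;
    }
}
```

Each thread writes only its own slot in partials, so no lock is needed until the results are combined after Join.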

So I first opted for the high-level approach offered by Pfx, Parallel.For, which it states allows me to spread loop iterations across multiple cores. In other words, nIteration in my case could be 3 on one core and 5 on another at the same time.

private static double CalcPiSimpleParallel()
{
    double pi = 1.0;
    object sync = new object();

    //for (int nIteration = 3; nIteration < 1000000000; nIteration += 2)
    Parallel.For<double>(0, N_ITERATIONS / 2, 1, () => { return 0; }, (nIteration, localPi) =>
    {
        double multiply = 1;
        // 3 5 7 9 11
        if (nIteration % 2 == 0)
        {
            multiply = -1;
        }
        localPi.ThreadLocalState += multiply * (1.0 / (3.0 + (double)nIteration * 2.0));
    },
    (localPi) => { lock (sync) { pi += localPi; } });

    return pi * 4.0;
}

Unfortunately this did not achieve the results I had hoped for... in fact it took twice as long. Bizarre... OK, perhaps not surprising, since it invokes a delegate for every iteration. Using Reflector you can see that Parallel.For uses the underlying Task class provided by Pfx.
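For comparison, the Parallel.For overload used in CalcPiSimpleParallel comes from the CTP. In the version that shipped with .NET 4, the per-thread subtotal is passed into and returned from the loop body rather than accessed through ThreadLocalState. A minimal sketch of the same per-iteration decomposition against that shipped API, assuming .NET 4 or later (ShippedParallelPi is a hypothetical name). It still makes one delegate call per term, so the same overhead applies.

```csharp
using System;
using System.Threading.Tasks;

public static class ShippedParallelPi
{
    // Same decomposition as CalcPiSimpleParallel, written against the
    // Parallel.For<TLocal> overload that shipped in .NET 4 (not the 2008 CTP).
    public static double CalcPi(int terms)
    {
        double pi = 1.0;            // the leading 1 of the series
        object sync = new object();

        Parallel.For<double>(0, terms,
            () => 0.0,                                  // localInit: per-thread subtotal
            (i, loopState, localPi) =>
            {
                // i = 0 -> 1/3 (negative), i = 1 -> 1/5 (positive), ...
                double multiply = (i % 2 == 0) ? -1.0 : 1.0;
                return localPi + multiply * (1.0 / (3.0 + i * 2.0));
            },
            localPi => { lock (sync) { pi += localPi; } }); // localFinally: merge once per thread

        return pi * 4.0;
    }
}
```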

A Task is a piece of work that you give to a task manager, which queues it for an available thread. The first thing to mention is that tasks do not run on thread-pool threads: a task manager has its own pool of threads, which it controls depending on the number of available cores and whether the running tasks are currently blocked.

Task task = Task.Create((o) =>
{
    Console.WriteLine("Task Running..");
});

task.Wait();

The code above creates a new task, which runs on one of the default task manager's threads, and the main thread waits for its completion.
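Task.Create is the CTP's factory method. In the Task class that eventually shipped in .NET 4, the equivalent is Task.Factory.StartNew (or Task.Run from .NET 4.5). One difference from the behaviour described above is that the shipped default scheduler runs tasks on the .NET thread pool rather than on a separate task-manager pool. A minimal sketch, assuming .NET 4 or later; TaskDemo and RunAndWait are hypothetical names.

```csharp
using System;
using System.Threading.Tasks;

public static class TaskDemo
{
    // Shipped-API counterpart of Task.Create(...) followed by task.Wait().
    public static int RunAndWait()
    {
        Task<int> task = Task.Factory.StartNew(() =>
        {
            Console.WriteLine("Task Running..");
            return 42; // a result, so the caller can see the work actually ran
        });

        task.Wait();        // block the calling thread until the task completes
        return task.Result;
    }
}
```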

Parallel.For uses a special variant of Task called a replicating task: when you create this task, the task manager effectively creates not a single instance of the task but as many instances as there are cores. Each of these task clones is then expected to work in harmony with the others to achieve the overall task. A typical implementation might therefore have an outer loop looking for the next piece of work within the overall task; when there is no more work, the clone simply ends.

In the case of Parallel.For you could expect the outer loop to simply perform an Interlocked.Increment on the loop counter until the required value is reached, and inside the loop to invoke the required piece of work. Here lies the problem with my example: the work executing inside the for loop is extremely trivial and takes very few cycles, so the overhead of invoking a delegate for each iteration has a large impact on overall performance.
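The outer-loop shape described above can be sketched with ordinary threads standing in for the replicated tasks. This is a hypothetical illustration of the idea, not the Pfx source: ReplicatedLoop is an invented name, and one worker per core repeatedly claims the next index with Interlocked.Increment and invokes the body delegate for it. A trivial body therefore pays for a delegate call and an interlocked operation on every iteration.

```csharp
using System;
using System.Threading;

public static class ReplicatedLoop
{
    // One worker per core; each claims indices from a shared counter until
    // the range is exhausted, then ends.
    public static void For(int fromInclusive, int toExclusive, Action<int> body)
    {
        int next = fromInclusive - 1; // last index handed out
        int workers = Environment.ProcessorCount;
        Thread[] threads = new Thread[workers];

        for (int w = 0; w < workers; w++)
        {
            threads[w] = new Thread(() =>
            {
                int i;
                // Outer loop: claim the next piece of work until none is left.
                while ((i = Interlocked.Increment(ref next)) < toExclusive)
                {
                    body(i); // one delegate call per iteration: costly if body is tiny
                }
            });
            threads[w].Start();
        }

        foreach (Thread t in threads) t.Join();
    }
}
```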

So, first word of warning when using Pfx: make sure the piece of work inside a given task is of a reasonable size, otherwise it won't scale. OK, so parallelising the CalcPi method is not as trivial as it first looks. Not put off, I refactored the code to give the algorithm an inner loop, thus increasing the amount of work in each Parallel.For iteration. To start with I simply broke it down into ten blocks, thinking each block would now be a reasonable amount of work and we would only be paying the overhead of a function call 10 times, as opposed to N_ITERATIONS/2 times.

private static double CalcPiDualLoop()
{
    double pi = 1.0;
    object sync = new object();
    int stride = N_ITERATIONS / 2 / 10;

    //for (int nIteration = 3; nIteration < 1000000000; nIteration += 2)
    Parallel.For<double>(0, N_ITERATIONS / 2, stride, () => { return 0; }, (nIteration, localPi) =>
    {
        double multiply = 1;
        // 3 5 7 9 11
        if (nIteration % 2 == 0)
        {
            multiply = -1;
        }
        double piVal = 0;
        for (int val = nIteration; val < nIteration + stride; val++)
        {
            piVal += multiply * (1.0 / (3.0 + (double)val * 2.0));
            multiply *= -1;
        }
        localPi.ThreadLocalState += piVal;
    },
    (localPi) => { lock (sync) { pi += localPi; } });

    return pi * 4.0;
}

But only partial luck: I got less than a 10% speed-up... not what I was expecting. So I had another look at the implementation of Parallel.For to understand why. Digging into it, you realise that each of the tasks created by Parallel.For doesn't just take the next value in the loop and work on that, but takes the next eight values. What this means in my case, with a two-core CPU, is that the first task to run takes the first 8 iterations of the loop to work on, and the second task gets just 2. The second task completes its work and, since there is no more, ends, leaving the first task to complete its remaining 6 on one core and leaving my other core cold.

To make sure I wasn't seeing things, I made the number of Parallel.For iterations a multiple of 8 × the number of cores, and hey presto, it took just over half the time.
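The workaround amounts to rounding the number of Parallel.For iterations up to a multiple of 8 × the core count, so that the eight-at-a-time claiming observed in the CTP gives every core the same number of full grabs. A small helper for that arithmetic; ChunkMath and BalancedBlockCount are hypothetical names, and the factor of 8 reflects the behaviour seen in the CTP rather than any documented setting.

```csharp
using System;

public static class ChunkMath
{
    // Iterations the CTP's Parallel.For was observed to claim per grab.
    const int ObservedChunk = 8;

    // Rounds the desired number of blocks up to the nearest multiple of
    // ObservedChunk * cores, so every core receives the same number of full grabs.
    public static int BalancedBlockCount(int desiredBlocks, int cores)
    {
        int unit = ObservedChunk * cores;
        return ((desiredBlocks + unit - 1) / unit) * unit;
    }
}
```

On the two-core machine above, BalancedBlockCount(10, 2) gives 16, so the stride in CalcPiDualLoop would become N_ITERATIONS / 2 / 16; Environment.ProcessorCount can supply the core count at run time.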

I can see that taking chunks of work at a time reduces contention for work allocation, but I'm struggling to see why this would be necessary, since that contention will not be that great, especially as each iteration needs to be of a reasonable size for Parallel.For to work efficiently anyway. I really hope they address this issue when they finally release the library, since having to know how many cores you are running on, plus the magic number 8, seems rather odd and goes against what I believe they were trying to achieve.

I also implemented the method using the low-level parallel task library, and that scales beautifully too...

private static double ParallelCalcPi()
{
    object sync = new object();
    int nIteration = 3;
    double pi = 1;
    int stride = N_ITERATIONS / 4;

    Task task = Task.Create((o) =>
    {
        Console.WriteLine("Running..{0}", Thread.CurrentThread.ManagedThreadId);
        double localPi = 0.0;
        double multiply = -1;
        long end;
        while ((end = Interlocked.Add(ref nIteration, stride)) <= N_ITERATIONS + 3)
        {
            //Console.WriteLine("Next Chunk {0} from {1} to {2}", Thread.CurrentThread.ManagedThreadId,
            //    end - stride, end);
            for (int val = (int)(end - stride); val < end; val += 2)
            {
                localPi += multiply * (1.0 / (double)val);
                multiply *= -1;
            }
        }
        lock (sync) { pi += localPi; }
    }, TaskCreationOptions.SelfReplicating);

    task.Wait();
    return pi * 4.0;
}

First impressions are that the Task library seems OK, but Parallel.For seems ill thought out. I have also done some experiments with Parallel.Do, which allows me to kick off multiple tasks by supplying a series of Action delegates as parameters; Parallel.Do then blocks waiting for them to complete. Unfortunately there is no timeout parameter, which means it waits forever: if an action goes into a rogue state and never completes, the thread that invoked Parallel.Do will hang, which is not desirable. So my second request is that they add a timeout parameter.
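Parallel.Invoke, the name under which Parallel.Do shipped in .NET 4, also has no timeout parameter. The behaviour asked for here can be approximated by starting each action as a task and calling Task.WaitAll with a timeout, which returns false instead of blocking forever. A minimal sketch, assuming .NET 4 or later; InvokeWithTimeout and TryInvokeAll are hypothetical names. On timeout the rogue action keeps running (the caller simply stops waiting for it), and if any action throws, WaitAll rethrows as an AggregateException.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class InvokeWithTimeout
{
    // Starts each action as a task and waits at most 'timeout' for all of them.
    // Returns false if any action is still running when the timeout expires.
    public static bool TryInvokeAll(TimeSpan timeout, params Action[] actions)
    {
        Task[] tasks = new Task[actions.Length];
        for (int i = 0; i < actions.Length; i++)
        {
            tasks[i] = Task.Factory.StartNew(actions[i]);
        }
        return Task.WaitAll(tasks, timeout);
    }
}
```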

About Me

- Andy Clymer

- I'm a freelance consultant for .NET-based technology. My last real job was at Cisco Systems, where I was a lead architect for Cisco's identity solutions. I arrived at Cisco via acquisition and prior to that worked in small startups. The startup culture is what appeals to me, and that's why I finally left Cisco after seven years... I now fill my time through a combination of consultancy and teaching for Developmentor... and working on insane startups that nobody with an ounce of sense would look twice at...